Using ARM Templates in C# Integration Tests

I'm working on a storage abstraction library, Storage.Net, and one of the things it promises is identical behavior across the different implementations of a storage interface. To achieve this, the project does extensive integration testing against every technology it touches, and both the number of technologies and the number of tests run against them keep growing. Until recently this was more or less OK. However, as the number of resources required for testing grows, so do my cloud bills, and I'm almost at the point where I can't afford to pay them for a free open-source library. That left a few options:

- Find a sponsor to pay the cloud bills for me. I quickly discarded this, as people are generally keen to consume OSS for free but not to contribute to it.

- Be smarter about consuming cloud resources. This is what I went for, and this post describes how I brought cloud costs down almost to zero while still growing the number of resources I use.

One of the amazing attributes of the cloud, which we all know about but rarely use, is that you can create resources on demand and destroy them when you no longer need them, so you stop paying for them. In this project I only need resources during the integration testing phase and, very rarely, during development. The rest of the time they simply sit idle. And while storage accounts are incredibly cheap, something like Azure Service Bus or Event Hubs can add to your bill considerably, especially as you create more topics, subscriptions, namespaces and so on.

Deciding what you need

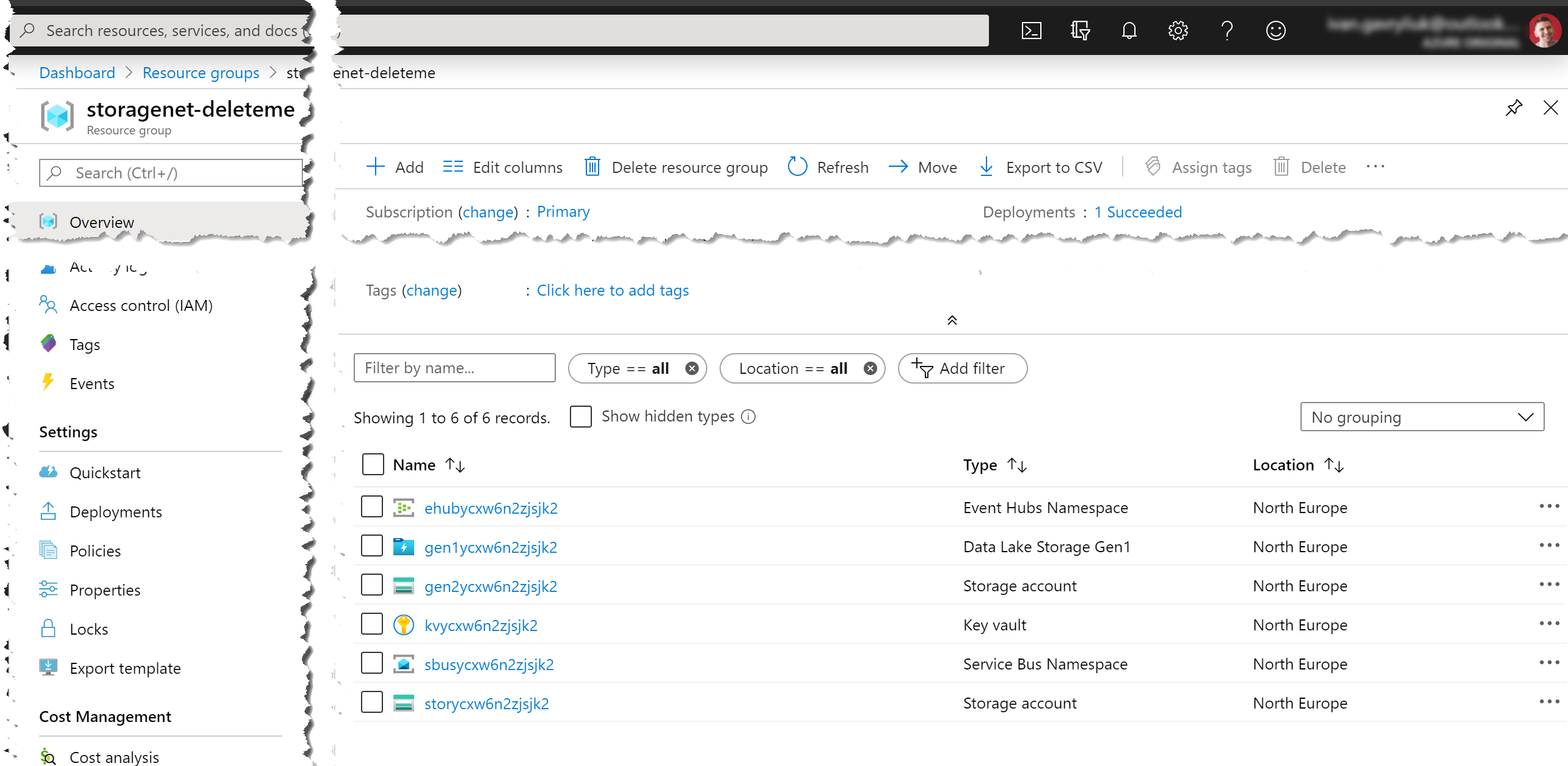

In my case, Storage.Net requires a few basic Azure resources, like:

- Azure Storage Account

- Azure Key Vault

- Azure Data Lake Storage Gen 1

- Azure Data Lake Storage Gen 2

And so on. I'll limit the number of resources in this sample so it's easier to follow. Let's say we just want to create a Storage Account and a Data Lake Storage Gen 1 account. The easiest way to do that is to define them in an ARM template, so I'll do just that:

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "tag": {
      "defaultValue": "develop",
      "type": "string"
    },
    "operatorObjectId": {
      "defaultValue": "...type here...",
      "type": "string"
    },
    "testUserObjectId": {
      "defaultValue": "... type here ...",
      "type": "string"
    }
  },
  "variables": {
    "storName": "[concat('stor', uniqueString(parameters('tag')))]",
    "gen1Name": "[concat('gen1', uniqueString(parameters('tag')))]"
  },
  "resources": [
    {
      "type": "Microsoft.Storage/storageAccounts",
      "tags": {
        "instance": "[parameters('tag')]"
      },
      "apiVersion": "2019-04-01",
      "name": "[variables('storName')]",
      "location": "[resourceGroup().location]",
      "sku": {
        "name": "Standard_RAGRS",
        "tier": "Standard"
      },
      "kind": "StorageV2",
      "properties": {
        "networkAcls": {
          "bypass": "AzureServices",
          "virtualNetworkRules": [],
          "ipRules": [],
          "defaultAction": "Allow"
        },
        "supportsHttpsTrafficOnly": true,
        "encryption": {
          "services": {
            "file": {
              "enabled": true
            },
            "blob": {
              "enabled": true
            }
          },
          "keySource": "Microsoft.Storage"
        },
        "accessTier": "Hot"
      }
    },
    {
      "type": "Microsoft.DataLakeStore/accounts",
      "tags": {
        "instance": "[parameters('tag')]"
      },
      "apiVersion": "2016-11-01",
      "name": "[variables('gen1Name')]",
      "location": "[resourceGroup().location]",
      "properties": {
        "firewallState": "Disabled",
        "firewallAllowAzureIps": "Disabled",
        "firewallRules": [],
        "trustedIdProviderState": "Disabled",
        "trustedIdProviders": [],
        "encryptionState": "Enabled",
        "encryptionConfig": {
          "type": "ServiceManaged"
        },
        "newTier": "Consumption"
      }
    }
  ],
  "outputs": {
    "AzureStorageName": {
      "type": "string",
      "value": "[variables('storName')]"
    },
    "AzureStorageKey": {
      "type": "string",
      "value": "[listKeys(resourceId('Microsoft.Storage/storageAccounts', variables('storName')), providers('Microsoft.Storage', 'storageAccounts').apiVersions[0]).keys[0].value]"
    },
    "AzureGen1StorageName": {
      "type": "string",
      "value": "[variables('gen1Name')]"
    },
    "OperatorObjectId": {
      "type": "string",
      "value": "[parameters('operatorObjectId')]"
    },
    "TestUserObjectId": {
      "type": "string",
      "value": "[parameters('testUserObjectId')]"
    }
  }
}
```

There are a few important things in this template:

Input parameters

I'm passing:

- `tag`, in case I need to create several instances of my resources that are independent of each other. Resource names are composed from the tag.

- `operatorObjectId` is the Active Directory object ID of an operator (in this case my own account). I use it in various places to automatically grant myself permissions on the test resources, because sometimes I need to investigate integration test failures manually.

- `testUserObjectId` is the object ID of a Service Principal that the integration tests use to access the various resources. It is created manually in Active Directory.

Resources

- Resource names are generated in the `variables` section, so I can reference them easily throughout the ARM template, as you will see later.

- Resource locations are taken from the current resource group (`[resourceGroup().location]`) to avoid passing in more and more parameters.
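Because names are built from the tag, the same tag always yields the same names, while a different tag yields a separate, independent set of resources. The C# sketch below illustrates that idea only; it is not ARM's `uniqueString` algorithm. The helper `ResourceName` is hypothetical: it hashes the tag with SHA-256 and keeps 13 lowercase base-32 characters (the same length `uniqueString` returns), so `stor` + hash stays within the 3-24 lowercase letters and digits allowed for storage account names.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper: derives a deterministic resource name from a prefix and a tag.
// It mimics the *idea* of concat('stor', uniqueString(parameters('tag'))),
// not ARM's actual uniqueString implementation.
static string ResourceName(string prefix, string tag)
{
    const string alphabet = "abcdefghijklmnopqrstuvwxyz234567"; // lowercase base-32
    byte[] hash = SHA256.HashData(Encoding.UTF8.GetBytes(tag));

    var sb = new StringBuilder(prefix);
    // Take 13 characters of 5 bits each from the start of the 256-bit hash.
    for (int i = 0; i < 13; i++)
    {
        int bitOffset = i * 5;
        int byteIndex = bitOffset / 8;
        int shift = bitOffset % 8;
        // Read two bytes so a 5-bit group can straddle a byte boundary.
        int twoBytes = (hash[byteIndex] << 8) | hash[byteIndex + 1];
        int index = (twoBytes >> (11 - shift)) & 0x1F;
        sb.Append(alphabet[index]);
    }
    return sb.ToString();
}

// Same tag -> same names; a different tag -> a separate set of names.
Console.WriteLine(ResourceName("stor", "develop"));
Console.WriteLine(ResourceName("gen1", "develop"));
Console.WriteLine(ResourceName("stor", "feature-x"));
```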

Outputs

This is the most interesting section, as it outputs the results of the ARM template deployment. In my case I need to know 5 different values in order to use them in the integration tests and connect to the created resources.
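As an example of what these outputs are for: the storage account name and key are enough to build a standard Azure Storage connection string on the test side. This is a minimal sketch assuming the account lives in the public Azure cloud (`core.windows.net`); `BuildStorageConnectionString` is a hypothetical helper, not part of Storage.Net.

```csharp
using System;

// Hypothetical helper: turns the AzureStorageName / AzureStorageKey outputs
// into a connection string in the standard Azure Storage format.
// Assumes the public Azure cloud endpoint suffix (core.windows.net).
static string BuildStorageConnectionString(string accountName, string accountKey)
{
    if (string.IsNullOrWhiteSpace(accountName))
        throw new ArgumentException("Account name is required.", nameof(accountName));
    if (string.IsNullOrWhiteSpace(accountKey))
        throw new ArgumentException("Account key is required.", nameof(accountKey));

    return $"DefaultEndpointsProtocol=https;AccountName={accountName};AccountKey={accountKey};EndpointSuffix=core.windows.net";
}

// Placeholder values for illustration only.
string connectionString = BuildStorageConnectionString("storexample", "base64key==");
Console.WriteLine(connectionString);
```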

Using Azure Pipelines

This ARM template is then committed along with the source code in the Git repository, so that I can run it from the Azure Pipelines YAML:

```yaml
- task: AzureResourceManagerTemplateDeployment@3
  displayName: 'Deploy Test Azure Resources'
  inputs:
    deploymentScope: 'Resource Group'
    azureResourceManagerConnection: '...'
    subscriptionId: '...'
    action: 'Create Or Update Resource Group'
    resourceGroupName: $(rgName)
    location: 'North Europe'
    templateLocation: 'Linked artifact'
    csmFile: 'infra/azure.json'
    deploymentMode: 'Incremental'
    deploymentOutputs: 'InfraOutput'
```

This task takes my resource definition (`infra/azure.json`) and creates or updates a resource group, creating all the required resources in it. One of the interesting properties of this task is the `deploymentOutputs` parameter: it names the Azure Pipelines variable into which the task puts the deployment results (the `outputs` section). This means we can use them in subsequent tasks.
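The value stored in `InfraOutput` is a JSON object keyed by output name, where each entry carries the output's `type` and `value` (the PowerShell scripts later in this post rely on that `.value` shape). Below is a minimal C# sketch, assuming that shape, which flattens it into a name/value dictionary. The lookup is case-insensitive because output names may not keep the exact casing used in the template (the post-configuration script later reads `azureGen1StorageName` for the `AzureGen1StorageName` output). The sample values are made up.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Flattens ARM deployment outputs of the form
// { "<name>": { "type": "String", "value": "..." }, ... }
// into name -> value, with case-insensitive lookups.
static Dictionary<string, string> ParseDeploymentOutputs(string json)
{
    var result = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
    using JsonDocument doc = JsonDocument.Parse(json);
    foreach (JsonProperty output in doc.RootElement.EnumerateObject())
    {
        if (output.Value.ValueKind == JsonValueKind.Object &&
            output.Value.TryGetProperty("value", out JsonElement value))
        {
            // String outputs are unwrapped; anything else is kept as raw JSON text.
            result[output.Name] = value.ValueKind == JsonValueKind.String
                ? value.GetString()!
                : value.GetRawText();
        }
    }
    return result;
}

string sample = @"{
  ""azureStorageName"": { ""type"": ""String"", ""value"": ""storabc123"" },
  ""operatorObjectId"": { ""type"": ""String"", ""value"": ""00000000-0000-0000-0000-000000000000"" }
}";

var outputs = ParseDeploymentOutputs(sample);
Console.WriteLine(outputs["AzureStorageName"]); // prints storabc123 (lookup ignores case)
```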

Using the deployment outputs

Now that the resources are created, how do we connect to them? Potentially, every run will generate unique resource names, new secrets and so on. I could pass them as environment variables further down to my integration tests, and that would work. However, I would also like to use those resources from my development machine sometimes, to perform some tricky investigations. For instance, if a test fails, I'd like to be able to connect to the resource involved in the failure and see what exactly was going on, probably by reproducing the test in a debugger.

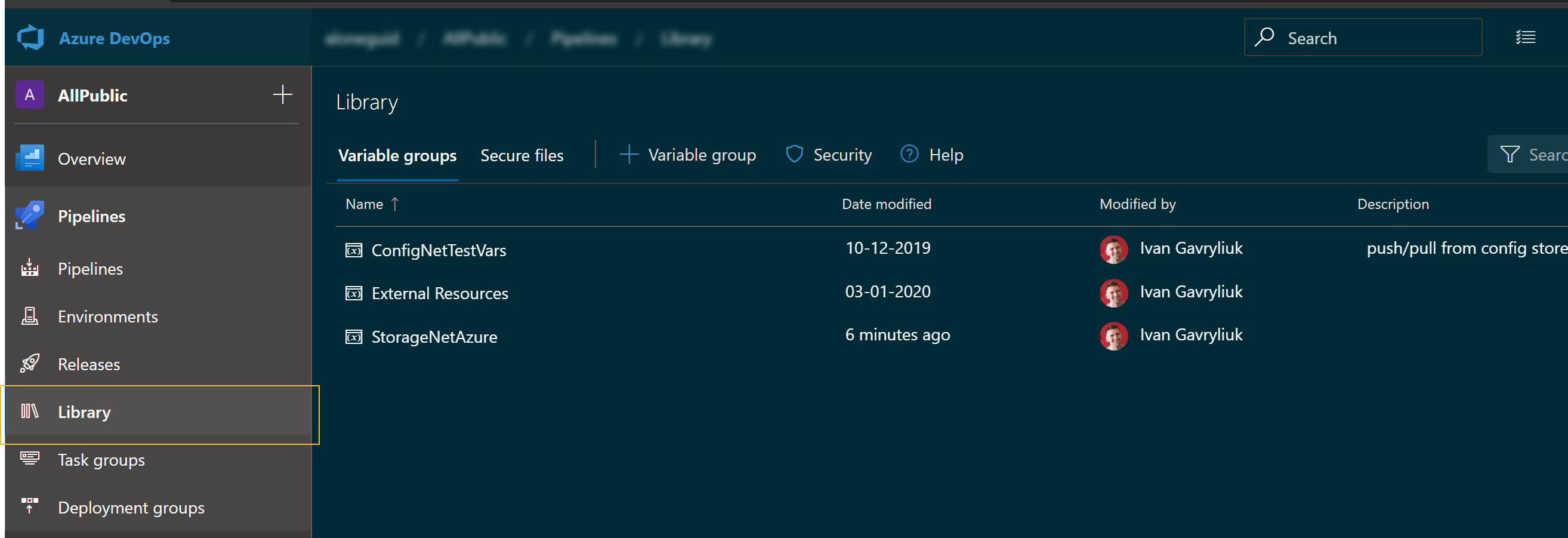

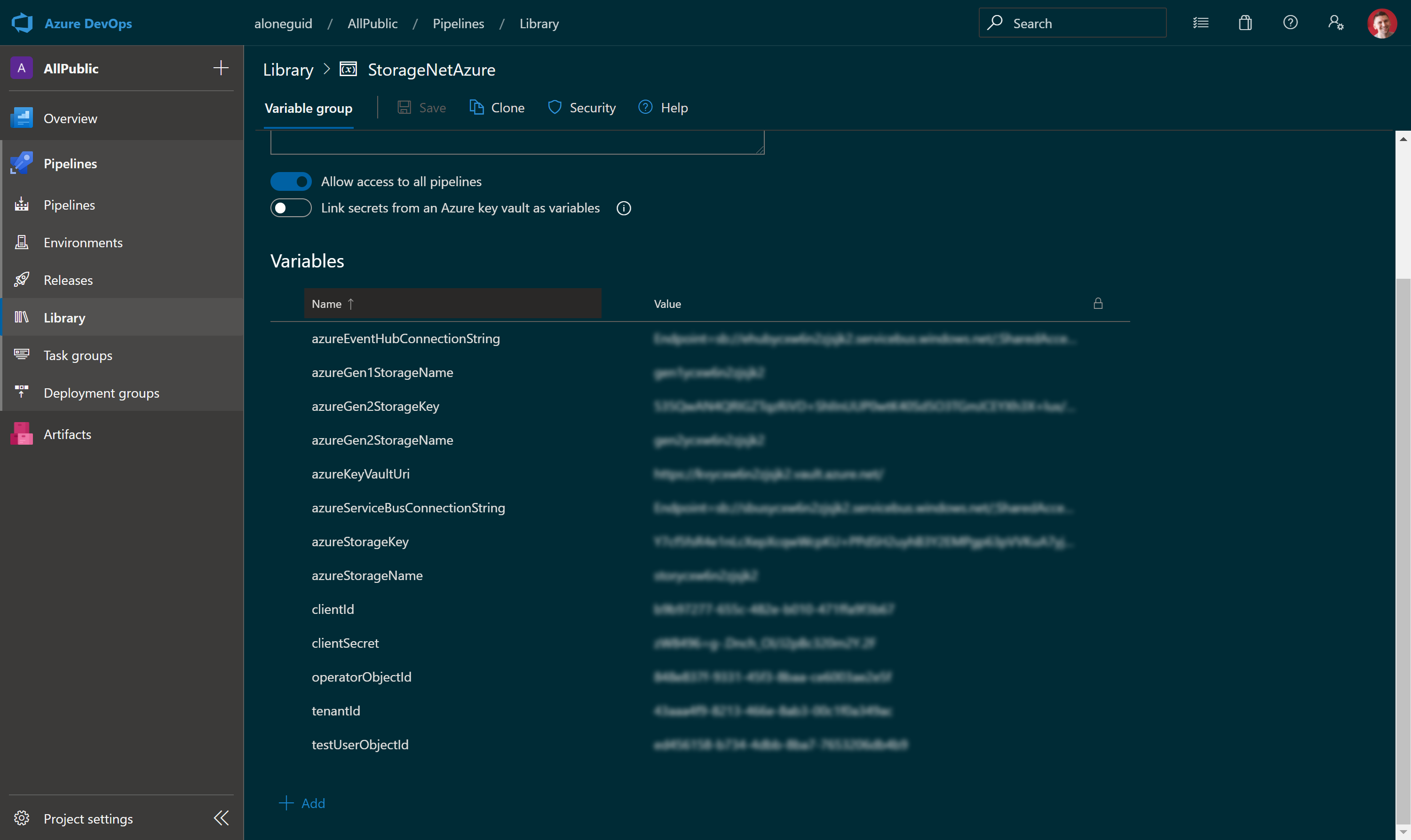

For that reason, I've decided to use the Variable Groups feature of Azure Pipelines. You can find it in the Library section:

I've created a new variable group called StorageNetAzure, and what I'd like to do is update this variable group with the relevant values once the resources are created. To do that, I've written a simple PowerShell script:

```powershell
param(
    [string] $JsonString,
    [string] $Organisation,
    [string] $Project,
    [string] $GroupId,
    [string] $Pat
)

function Get-AzPipelinesVariableGroup(
    [string] $Organisation,
    [string] $Project,
    [string] $GroupId,
    [string] $Pat
) {
    # Base64-encodes the Personal Access Token (PAT) appropriately
    $Base64AuthInfo = [Convert]::ToBase64String([Text.Encoding]::ASCII.GetBytes(("{0}:{1}" -f "", $Pat)))

    # GET https://dev.azure.com/{organization}/{project}/_apis/distributedtask/variablegroups/{groupId}?api-version=5.1-preview.1
    $vg = Invoke-RestMethod `
        -Uri "https://dev.azure.com/$($Organisation)/$($Project)/_apis/distributedtask/variablegroups/$($GroupId)?api-version=5.1-preview.1" `
        -Method Get `
        -ContentType "application/json" `
        -Headers @{Authorization=("Basic {0}" -f $Base64AuthInfo)}

    $vg
}

function Set-AzPipelinesVariableGroup(
    [string] $Organisation,
    [string] $Project,
    [string] $GroupId,
    [string] $Pat,
    $VariableGroup
) {
    # Base64-encodes the Personal Access Token (PAT) appropriately
    $Base64AuthInfo = [Convert]::ToBase64String([Text.Encoding]::ASCII.GetBytes(("{0}:{1}" -f "", $Pat)))

    # PUT https://dev.azure.com/{organization}/{project}/_apis/distributedtask/variablegroups/{groupId}?api-version=5.1-preview.1
    # ConvertTo-Json's default -Depth is 2, which can truncate nested objects, so raise it
    $body = $VariableGroup | ConvertTo-Json -Depth 10

    Invoke-RestMethod `
        -Uri "https://dev.azure.com/$($Organisation)/$($Project)/_apis/distributedtask/variablegroups/$($GroupId)?api-version=5.1-preview.1" `
        -Method Put `
        -ContentType "application/json" `
        -Headers @{Authorization=("Basic {0}" -f $Base64AuthInfo)} `
        -Body $body
}

function SetOrCreate(
    $VariableSet,
    [string]$Name,
    [string]$Value
) {
    $vo = $VariableSet.variables.$Name
    if ($null -eq $vo) {
        Write-Host " creating new member"
        # create 'value' object
        $vo = New-Object -TypeName psobject
        $vo | Add-Member value $Value
        $VariableSet.variables | Add-Member $Name $vo
    } else {
        Write-Host " updating to $Value"
        $vo.value = $Value
    }
}

Write-Host "reading var set..."
$vset = Get-AzPipelinesVariableGroup -Organisation $Organisation -Project $Project -GroupId $GroupId -Pat $Pat
Write-Host "vset: $vset"

# enumerate ARM output variables and update/create them in the variable set
$Json = ConvertFrom-Json $JsonString
foreach ($armMember in $Json | Get-Member -MemberType NoteProperty) {
    $name = $armMember.Name
    $value = $Json.$name.value
    Write-Host "arm| $name = $value"
    SetOrCreate $vset $name $value
}

Write-Host "updating var set..."
Set-AzPipelinesVariableGroup -Organisation $Organisation -Project $Project -GroupId $GroupId -Pat $Pat -VariableGroup $vset
```
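If you would rather keep this step in C#, the merge that `SetOrCreate` performs maps naturally onto `System.Text.Json.Nodes`. The sketch below covers only the in-memory update of the variable group document (fetching and PUT-ing it back are left out), and it assumes the `{ "variables": { "<name>": { "value": ... } } }` shape that the PowerShell script above relies on. The sample document is made up.

```csharp
using System;
using System.Text.Json.Nodes;

// Mirrors SetOrCreate from the PowerShell script: update the variable's
// "value" if the variable exists, otherwise add a new { "value": ... } entry.
static void SetOrCreate(JsonObject variableGroup, string name, string value)
{
    // Make sure there is a "variables" object to write into.
    if (variableGroup["variables"] is not JsonObject variables)
    {
        variables = new JsonObject();
        variableGroup["variables"] = variables;
    }

    if (variables[name] is JsonObject existing)
    {
        existing["value"] = value; // update in place
    }
    else
    {
        variables[name] = new JsonObject { ["value"] = value }; // create new member
    }
}

JsonObject group = JsonNode.Parse(
    @"{ ""name"": ""StorageNetAzure"", ""variables"": { ""AzureStorageName"": { ""value"": ""old"" } } }")!.AsObject();

SetOrCreate(group, "AzureStorageName", "storabc123");                          // updates
SetOrCreate(group, "OperatorObjectId", "00000000-0000-0000-0000-000000000000"); // creates
Console.WriteLine(group.ToJsonString());
```

Unlike PowerShell member access, `JsonObject` property lookups are case-sensitive by default, so variable names must match the group exactly.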

It requires a few input parameters to run:

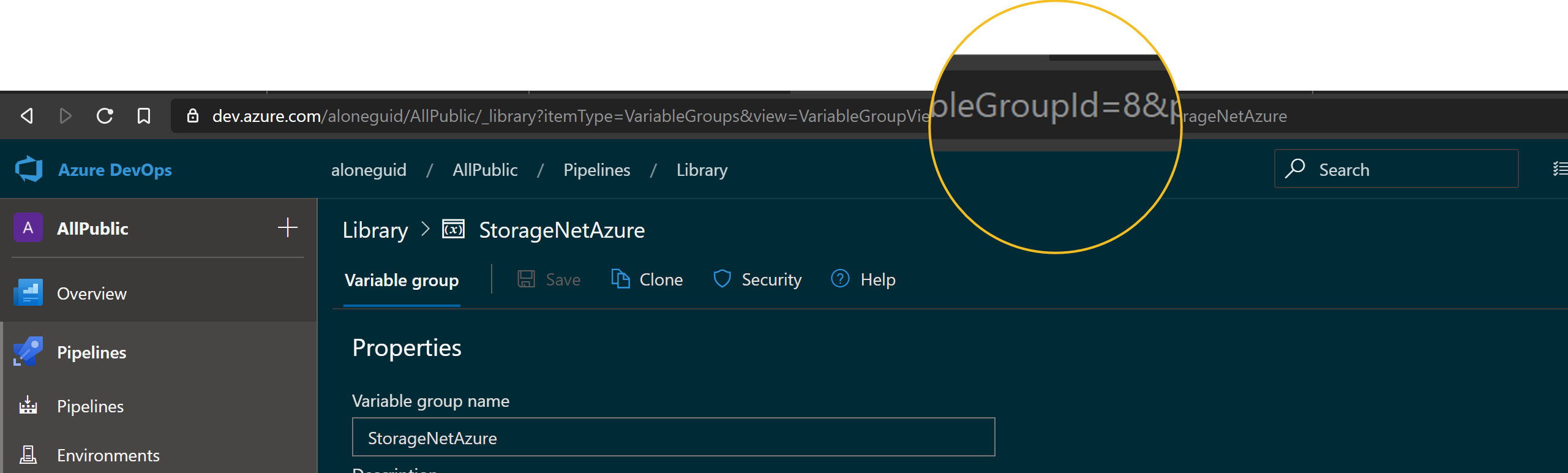

- `$JsonString`: the output from the previous task.

- `$Organisation`: the name of your Azure DevOps organisation.

- `$Project`: the name of the project inside the organisation that the pipeline runs in.

- `$GroupId`: the ID of the variable group.

- `$Pat`: a personal access token.

The first three are self-explanatory, whereas the last two need a bit of explanation. To get the variable group ID, simply open the group's page and find the identifier in the URL:

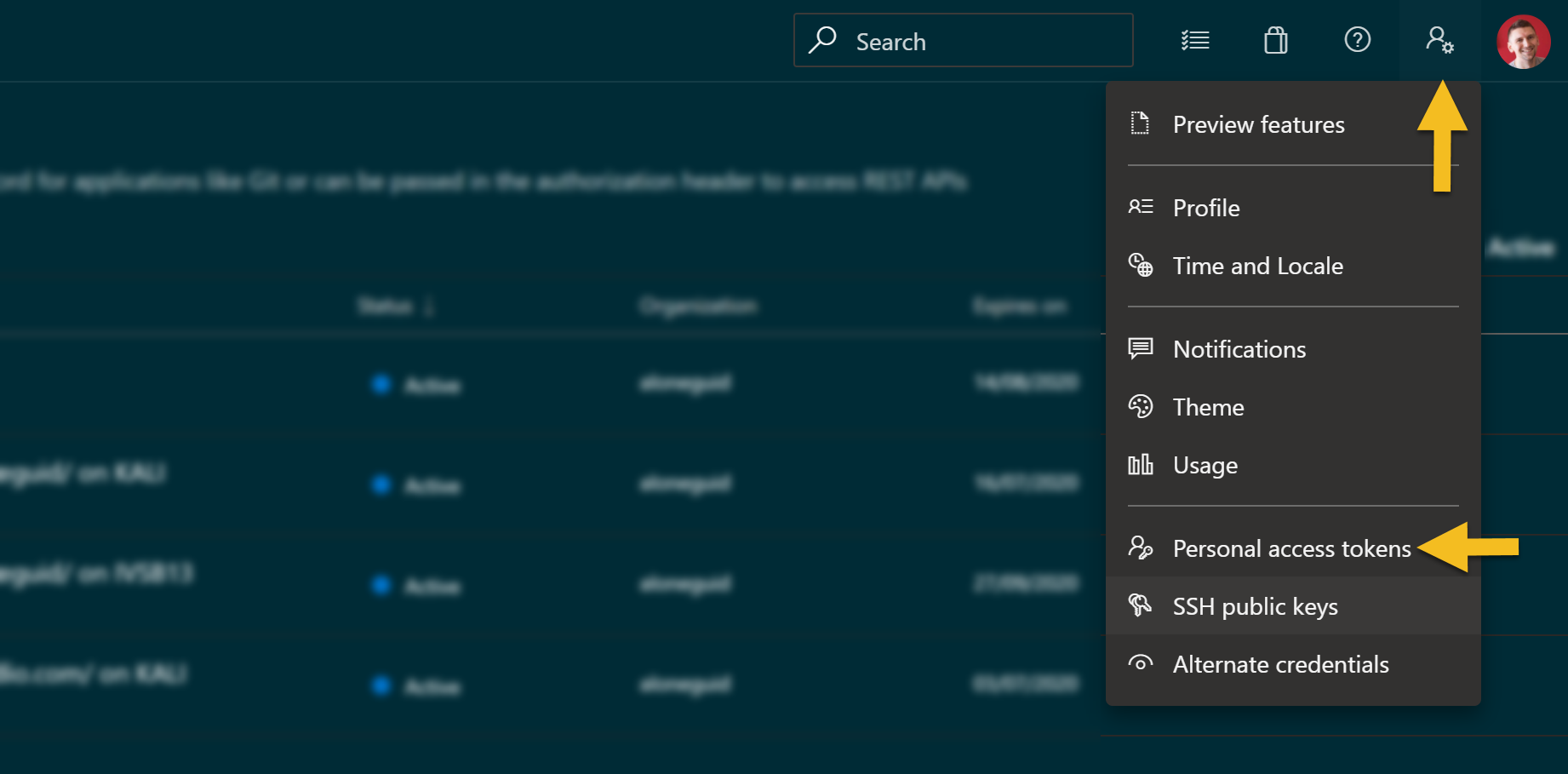
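For reference, here is how the same authenticated GET request could be built in C#: the PAT goes into a Basic authentication header with an empty user name, exactly as the script's `$Base64AuthInfo` line does. This is a minimal sketch; the organisation, project and PAT values are placeholders, and the request is only constructed here, not sent.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;

// Builds the same GET request as Get-AzPipelinesVariableGroup.
// The PAT is sent as HTTP Basic auth with an empty user name (":<pat>").
static HttpRequestMessage BuildGetVariableGroupRequest(string organisation, string project, string groupId, string pat)
{
    string url = $"https://dev.azure.com/{Uri.EscapeDataString(organisation)}/{Uri.EscapeDataString(project)}" +
                 $"/_apis/distributedtask/variablegroups/{Uri.EscapeDataString(groupId)}?api-version=5.1-preview.1";

    var request = new HttpRequestMessage(HttpMethod.Get, url);
    string token = Convert.ToBase64String(Encoding.ASCII.GetBytes($":{pat}"));
    request.Headers.Authorization = new AuthenticationHeaderValue("Basic", token);
    request.Headers.Accept.Add(new MediaTypeWithQualityHeaderValue("application/json"));
    return request;
}

// Placeholder values: substitute your own organisation, project, group ID and PAT.
using HttpRequestMessage request = BuildGetVariableGroupRequest("my-org", "my-project", "8", "my-pat");
Console.WriteLine(request.RequestUri);
// To send it: using var client = new HttpClient(); var response = await client.SendAsync(request);
```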

Generating secure PAT Token

To generate a PAT, go to the Azure DevOps portal itself and open settings -> Personal access tokens.

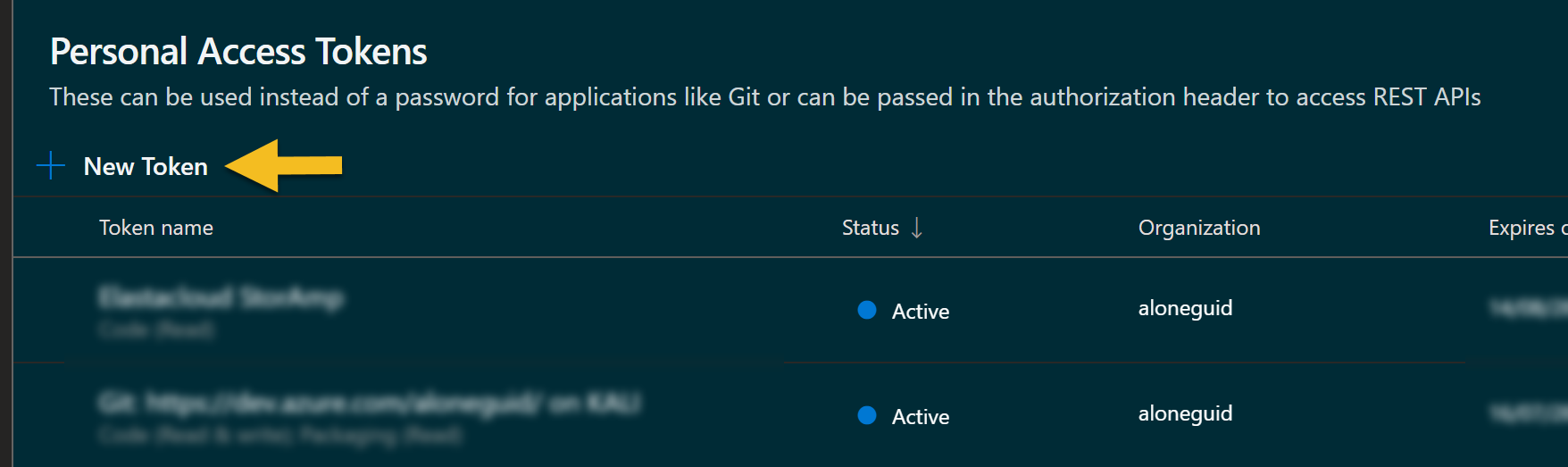

Then press "New Token".

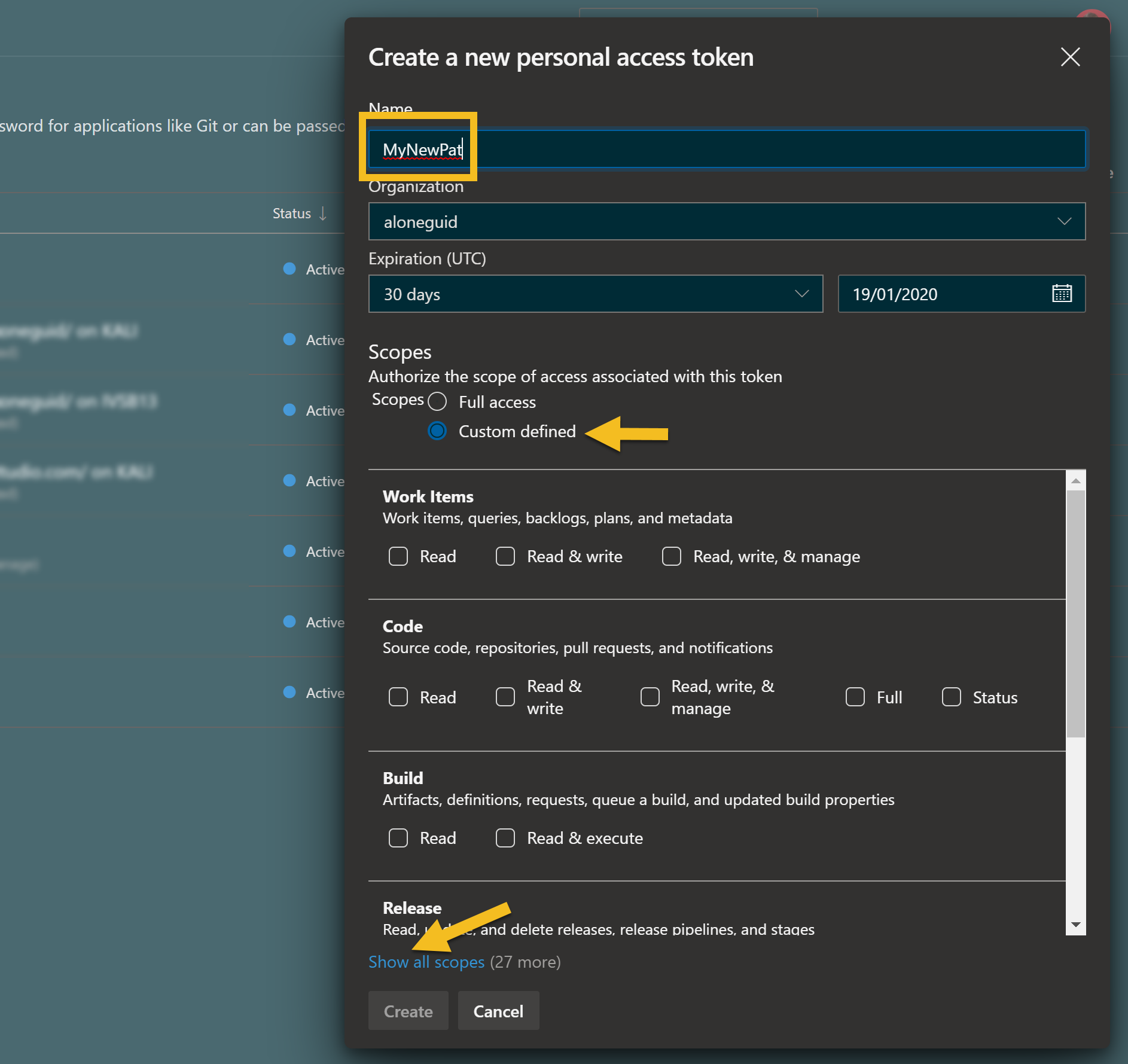

Give it a name, choose an expiry period, and for "Scopes", to keep the token as restricted as possible, click "Show all scopes".

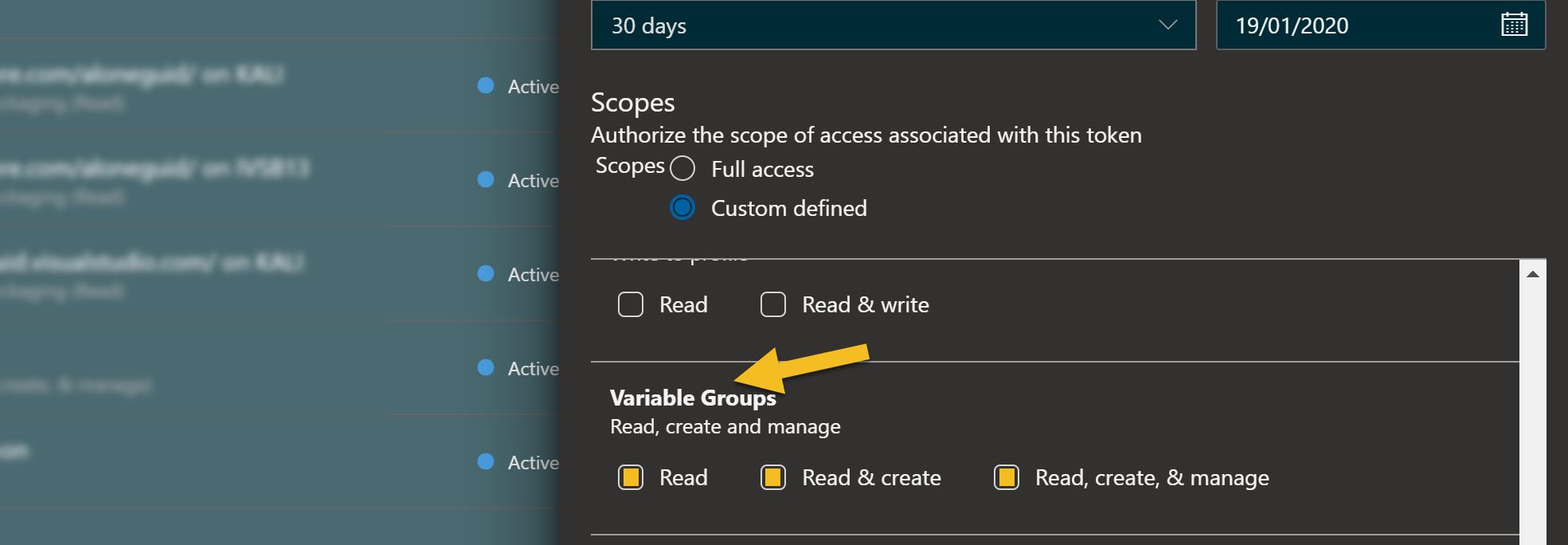

Then find "Variable Groups" and check all boxes.

Only then can you save it and get your PAT. This PAT will only be able to work with variable groups and nothing else, so even if it's stolen, there is less risk of the pipeline being compromised.

Putting it all together

Once you're ready, you can run this script in Azure Pipelines straight after the previous task:

```yaml
- task: PowerShell@2
  displayName: 'Transfer Secrets to Variable Set'
  inputs:
    filePath: 'infra/infraset.ps1'
    arguments: '-JsonString ''$(InfraOutput)'' -Organisation ''aloneguid'' -Project ''AllPublic'' -GroupId ''8'' -Pat ''$(Pat)'''
    failOnStderr: true
    pwsh: true
```

Well, that's it. If you've done everything correctly, you should see your variable set updated.

Post-configuring resources

There is one more thing. Some operations cannot be performed by ARM itself. For instance, in ADLS Gen 1, granting a test account permissions on the filesystem requires a PowerShell or Azure CLI script, so some post-configuration may be needed. I've achieved this by running another PowerShell script that again takes the generated outputs and applies the appropriate actions, for instance:

```powershell
param(
    [string] $JsonString,
    # passed by the pipeline task (-RgName); not used below
    [string] $RgName
)

#Import-Module Az.DataLakeStore

$Json = ConvertFrom-Json $JsonString
# property access is case-insensitive, so azureGen1StorageName matches the AzureGen1StorageName output
$Gen1AccountName = $Json.azureGen1StorageName.value
$OperatorObjectId = $Json.operatorObjectId.value
$TestUserObjectId = $Json.testUserObjectId.value

Write-Host "setting permissions for Data Lake Gen 1 ($Gen1AccountName)..."

# fails when ACL is already set
Set-AzDataLakeStoreItemAclEntry -Account $Gen1AccountName -Path / -AceType User `
    -Id $OperatorObjectId -Permissions All -Recurse -Concurrency 128 -ErrorAction SilentlyContinue

Set-AzDataLakeStoreItemAclEntry -Account $Gen1AccountName -Path / -AceType User `
    -Id $TestUserObjectId -Permissions All -Recurse -Concurrency 128 -ErrorAction SilentlyContinue
```

To put it all together, this is the complete sequence of scripts I run:

```yaml
- stage: Integration
  dependsOn: []
  jobs:
  - job: Infra
    displayName: 'Build Test Infrastructure'
    steps:
    - task: AzureResourceManagerTemplateDeployment@3
      displayName: 'Deploy Test Azure Resources'
      inputs:
        deploymentScope: 'Resource Group'
        azureResourceManagerConnection: '...'
        subscriptionId: '...'
        action: 'Create Or Update Resource Group'
        resourceGroupName: $(rgName)
        location: 'North Europe'
        templateLocation: 'Linked artifact'
        csmFile: 'infra/azure.json'
        deploymentMode: 'Incremental'
        deploymentOutputs: 'InfraOutput'
    - task: AzurePowerShell@4
      displayName: 'Post Configure Resources'
      inputs:
        azureSubscription: '...'
        ScriptType: 'FilePath'
        ScriptPath: 'infra/postconfigure.ps1'
        ScriptArguments: '-JsonString ''$(InfraOutput)'' -RgName $(rgName)'
        FailOnStandardError: true
        azurePowerShellVersion: 'LatestVersion'
        pwsh: true
    - task: PowerShell@2
      displayName: 'Transfer Secrets to Variable Set'
      inputs:
        filePath: 'infra/infraset.ps1'
        arguments: '-JsonString ''$(InfraOutput)'' -Organisation ''aloneguid'' -Project ''AllPublic'' -GroupId ''8'' -Pat ''$(Pat)'''
        failOnStderr: true
        pwsh: true
```

After the Infra job, other jobs follow which actually run integration tests.

Making variables accessible from integration tests

All my tests are in C#, therefore I need a way to consume that variable set from code. Azure Pipelines has an extensive set of APIs for that, as you've seen in the previous PowerShell sample, but the best news is that the Config.Net .NET library actually supports Azure DevOps variable sets! Therefore, I can configure my integration tests with the appropriate values in the following way.

First, suppose that all the required parameters (Pat, Org name etc.) are passed as environment variables:

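```csharp
using Config.Net;

// Bootstrap settings: read the Azure DevOps connection details from environment variables
ITestSettings bootstrap = new ConfigurationBuilder<ITestSettings>()
    .UseEnvironmentVariables()
    .Build();
```

Now, construct the instance to be used by tests:

```csharp
// Settings used by the tests: values from the Azure DevOps variable set, plus environment variables
ITestSettings instance = new ConfigurationBuilder<ITestSettings>()
    .UseAzureDevOpsVariableSet(
        bootstrap.DevOpsOrgName,
        bootstrap.DevOpsProject,
        bootstrap.DevOpsPat,
        bootstrap.DevOpsVariableSetId)
    .UseEnvironmentVariables()
    .Build();
```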

You can always check out ITestSettings.cs from the original project to get more info on this.
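The second builder chains two sources: the variable set, then environment variables. The idea behind such a chain can be shown without any library: ask each source in turn and take the first value found. The sketch below is hypothetical and independent of Config.Net; it assumes a "first registered source wins" rule, so check Config.Net's documentation for its actual precedence. `Layer` and the sample values are illustrative only.

```csharp
using System;
using System.Collections.Generic;

// A tiny "first source wins" resolver illustrating layered configuration.
// Each source returns null when it has no value for a key.
static Func<string, string?> Layer(params Func<string, string?>[] sources) =>
    key =>
    {
        foreach (var source in sources)
        {
            string? value = source(key);
            if (value is not null) return value;
        }
        return null;
    };

// Stand-in for the variable set populated by the Infra job (made-up value).
var variableSet = new Dictionary<string, string> { ["AzureStorageName"] = "storabc123" };

Func<string, string?> settings = Layer(
    key => variableSet.TryGetValue(key, out var v) ? v : null, // consulted first
    key => Environment.GetEnvironmentVariable(key));           // fallback

Console.WriteLine(settings("AzureStorageName"));
```

With this ordering, values produced by the Infra job take priority, while anything the variable set lacks can still come from the environment.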

Also, to get the original code for the infrastructure bits, check out this GitHub folder and azure-pipelines.yml.

Thanks for reading. If you would like to follow future posts, please subscribe to my RSS feed and/or follow me on Twitter.